AI Chatbot with a Knowledge Base

- Sairam Penjarla

- Oct 25, 2024

Updated: Nov 30, 2024

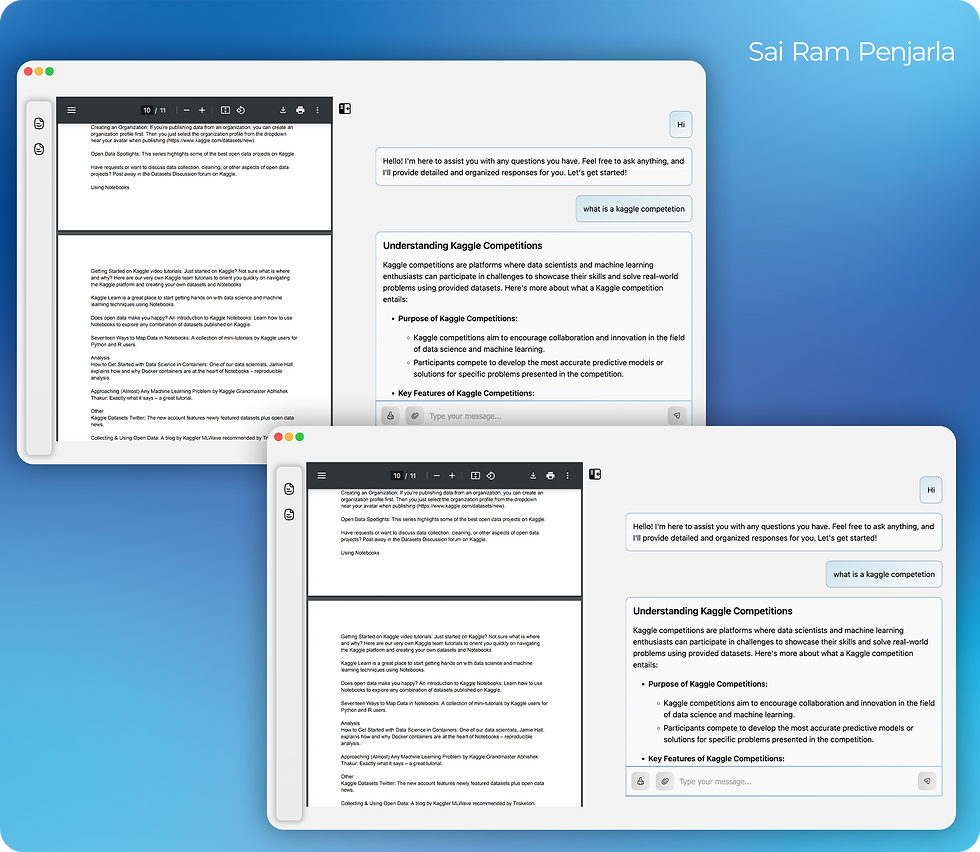

Welcome to my blog! Today, we’ll dive into an exciting project called AI Chatbot with a Knowledge Base. This project enables users to interact with a chatbot backed by a knowledge base populated with their own PDF documents.

👉 Watch this video to learn more about the project: https://youtu.be/lq8kC6pAfsQ

About Me

Hi, I’m Sai Ram, a data scientist specializing in:

Computer Vision

Natural Language Processing

Generative AI

On this website, I share cutting-edge data science projects you can use to improve your skills and build an impressive portfolio.

Be sure to check out my YouTube channel, where I post all my projects and detailed tutorials.

If you’re a beginner, explore my Roadmap to kickstart your data science journey, whether as a Data Scientist, Data Engineer, or Data Analyst. My blogs also cover essential topics like installing Python, Anaconda, using virtual environments, and Git basics.

Theory: Understanding the AI Chatbot with a Knowledge Base

This project revolves around Generative AI and the concept of integrating a chatbot with a knowledge base. Here's an overview:

Generative AI: This refers to AI systems capable of creating new content, such as text or images. The chatbot in this project is powered by an advanced LLM (Large Language Model).

OpenAI and LLMs: An OpenAI chat model generates the conversational responses.

LangChain and Knowledge Base: By combining OpenAI’s model with LangChain, we connect the chatbot to a knowledge base, enabling it to provide informed answers.

Embeddings: The project uses the sentence-transformers/all-MiniLM-L6-v2 model to generate embeddings from uploaded PDFs. These embeddings capture the semantic meaning of the content, so passages with similar meaning end up close together in vector space.

ChromaDB: A vector database that stores these embeddings and returns the passages most similar to a user's question, which the chatbot then uses as context.

The result? A chatbot that understands and answers questions based on the uploaded PDFs.
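To make the retrieval step concrete, here is a minimal, self-contained sketch of similarity search over embeddings. It is not the project's code: the hand-written three-dimensional vectors stand in for the 384-dimensional vectors that all-MiniLM-L6-v2 produces, and the `top_k` helper is a hypothetical stand-in for the lookup that ChromaDB performs.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=2):
    """Return the k chunk texts whose vectors are most similar to the query."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# Toy "embeddings": (chunk text, vector) pairs. Real vectors come from the model.
store = [
    ("Refunds are processed within 14 days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on public holidays.", [0.1, 0.9, 0.1]),
    ("Return shipping is free for damaged items.", [0.8, 0.2, 0.1]),
]

# Pretend embedding of the question "How do refunds work?"
query_vec = [1.0, 0.0, 0.0]
results = top_k(query_vec, store, k=2)
print(results)
```

The two refund-related chunks rank highest because their vectors point in nearly the same direction as the query vector. In the real project, the retrieved chunks are what gets passed to the LLM as context.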

Project Implementation

This project allows users to:

Upload PDFs to populate the knowledge base with company data.

Chat with the chatbot to retrieve relevant information.

Manage the knowledge base by adding or clearing documents.

View a preview of uploaded files in the UI.

Store and reload previous conversations using an SQLite database.

Core technologies:

Frontend: HTML templates rendered by Flask.

Backend: Flask routes for the API, OpenAI for the LLM, ChromaDB for vector storage, and SQLite for conversation history.

Code Walkthrough

1. Importing Required Libraries

Explanation:

The first section imports necessary libraries and modules for the project:

os and uuid handle file system operations and generate unique identifiers.

Utilities and ConversationDB are custom classes from src.utils that provide helper functions and manage the conversation database.

BOT_RESPONSE_GUIDELINES is a prompt template that is appended to each user question to guide the chatbot's responses.

Flask components like Flask, Response, jsonify, render_template, and request are used to create the web application and handle HTTP requests.

Code Block:

    import os
    import uuid

    from src.utils import Utilities, ConversationDB
    from src.prompt_templates import BOT_RESPONSE_GUIDELINES

    from flask import (
        Flask,
        Response,
        jsonify,
        render_template,
        request
    )

Summary:

This block sets up the required imports for file handling, chatbot logic, and the Flask framework.

2. Initializing the Flask App and Utilities

Explanation:

Initializes the Flask app, conversation database (conv_db), and utilities (utils).

Sets up a default conversation in bot_conversation.

Ensures a directory exists (static/uploaded_pdfs) for storing uploaded PDF files.

Code Block:

    app = Flask(__name__)

    conv_db = ConversationDB()
    utils = Utilities()

    bot_conversation = utils.get_default_conversation()

    UPLOAD_FOLDER = "static/uploaded_pdfs"
    os.makedirs(UPLOAD_FOLDER, exist_ok=True)

Summary:

This block initializes the web application, sets up utilities, and prepares a folder for uploaded PDF files.

3. Landing Page Route

Explanation:

Defines the root route (/) to render the landing page.

Retrieves previous conversations from the database and lists all PDF files in the UPLOAD_FOLDER.

Passes conversations and PDF file paths to the index.html template for rendering.

Code Block:

    @app.route("/")
    def landing_page():
        # Retrieve conversations
        conversations = conv_db.retrieve_conversations()

        # List all PDF files in the UPLOAD_FOLDER
        pdf_files = []
        if os.path.exists(UPLOAD_FOLDER):
            pdf_files = [
                os.path.join(UPLOAD_FOLDER, f)
                for f in os.listdir(UPLOAD_FOLDER)
                if f.endswith('.pdf')
            ]

        return render_template("index.html", conversations=conversations, pdf_files=pdf_files)

Summary:

This route displays the landing page with past conversations and uploaded PDF file previews.
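The file-listing logic can be pulled into a small helper, which makes it easy to try outside Flask. The sketch below is a variation rather than the project's code: the `list_pdf_files` name is hypothetical, and it differs from the route above by matching the extension case-insensitively and sorting the result, since `os.listdir` returns entries in arbitrary order.

```python
import os
import tempfile


def list_pdf_files(folder):
    """Return sorted paths of the PDF files in folder (extension match ignores case)."""
    if not os.path.isdir(folder):
        return []
    return sorted(
        os.path.join(folder, name)
        for name in os.listdir(folder)
        if name.lower().endswith(".pdf")
    )


# Try it on a throwaway directory containing two PDFs and one other file.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("b.pdf", "a.PDF", "notes.txt"):
        open(os.path.join(tmp, name), "w").close()
    found = [os.path.basename(path) for path in list_pdf_files(tmp)]

print(found)
```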

4. Uploading and Indexing PDFs

Explanation:

Handles file uploads via POST requests to /create_vector_index.

Validates that a file was provided and that its name ends with .pdf. The check is on the file extension only, not on the file contents.

Generates a unique filename and saves the file in UPLOAD_FOLDER.

Processes the file using utils.read_documents() to extract content and create vector embeddings.

Code Block:

    @app.route('/create_vector_index', methods=['POST'])
    def create_vector_index():
        if 'pdf' not in request.files:
            return jsonify({'success': False, 'error': 'No file part'}), 400

        file = request.files['pdf']

        if file.filename == '':
            return jsonify({'success': False, 'error': 'No selected file'}), 400

        if file and file.filename.endswith('.pdf'):
            try:
                unique_filename = f"{uuid.uuid4()}_{file.filename}"
                file_path = os.path.join(UPLOAD_FOLDER, unique_filename)

                # Save the file
                file.save(file_path)

                with open(file_path, 'rb') as pdf_file:
                    pdf_bytes = pdf_file.read()

                utils.read_documents(pdf_bytes)

                return jsonify({'success': True, 'file_path': file_path})
            except Exception as e:
                return jsonify({'success': False, 'error': str(e)}), 500

        return jsonify({'success': False, 'error': 'Invalid file type'}), 400

Summary:

This route allows users to upload PDF files, processes them into vector embeddings, and provides feedback on the operation's success.
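One thing worth hardening in an upload route is the filename, because it comes straight from the client. The sketch below shows one possible approach using only the standard library; `make_upload_name` is a hypothetical helper, not part of the project. It strips directory components, replaces unusual characters, and accepts the .pdf extension in any letter case.

```python
import os
import re
import uuid


def make_upload_name(original_name):
    """Build a unique, filesystem-safe name for an uploaded PDF.

    Returns None when the name does not look like a PDF file.
    """
    # Keep only the final path component so "../../x.pdf" cannot leave the upload folder.
    base = os.path.basename(original_name.replace("\\", "/"))
    # Replace anything outside a conservative character set.
    base = re.sub(r"[^A-Za-z0-9._-]", "_", base)
    if base.lower() == ".pdf" or not base.lower().endswith(".pdf"):
        return None
    return f"{uuid.uuid4().hex}_{base}"


name = make_upload_name("../../Quarterly Report.PDF")
rejected = make_upload_name("malware.exe")
print(name, rejected)
```

If you prefer a maintained helper, Werkzeug (which Flask depends on) ships `secure_filename` in `werkzeug.utils` for the sanitizing part.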

5. Chatbot Interaction

Explanation:

Accepts user input via POST requests to /invoke_bot.

Queries the knowledge base (utils.query_chromadb()) when the request's file_is_attached flag is set; otherwise the context is left empty.

Builds a conversation message using utils.get_user_msg().

Streams the LLM’s output as the bot response using utils.invoke_llm_stream().

Code Block:

    @app.route('/invoke_bot', methods=['POST'])
    def invoke_bot():
        data = request.get_json()
        user_input = data.get('user_input')
        file_is_attached = data.get('file_is_attached')

        CONTENT = utils.query_chromadb(user_input) if file_is_attached else ""

        msg = utils.get_user_msg(
            content = CONTENT,
            conversations = bot_conversation,
            present_question = user_input + BOT_RESPONSE_GUIDELINES,
        )

        bot_output = utils.invoke_llm_stream(conversations = bot_conversation + [msg])

        return Response(bot_output, content_type='text/event-stream')

Summary:

This route facilitates chatbot interactions by integrating the LLM with the knowledge base and streaming responses.
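The internals of utils.get_user_msg() and utils.invoke_llm_stream() are not shown in this post, so the following is only a sketch of the two ideas involved, under stated assumptions. `build_user_message` is a hypothetical way to fold retrieved context into a chat message, and `to_sse` shows how plain text chunks can be framed for the text/event-stream content type. Flask's Response accepts any generator like this one and sends each yielded string as it is produced.

```python
def build_user_message(content, question):
    """Combine retrieved context and the question into one chat message.

    The "Context/Question" layout is an assumption made for illustration.
    """
    if content:
        text = f"Context:\n{content}\n\nQuestion: {question}"
    else:
        text = f"Question: {question}"
    return {"role": "user", "content": text}


def to_sse(chunks):
    """Wrap each text chunk as one Server-Sent Events message."""
    for chunk in chunks:
        # Each line of the payload needs its own "data:" prefix;
        # the trailing blank line marks the end of the event.
        yield "".join(f"data: {line}\n" for line in chunk.split("\n")) + "\n"


msg = build_user_message("Refunds take 14 days.", "How long do refunds take?")
# A list of strings stands in for the chunks an LLM would stream back.
events = list(to_sse(["Refunds take", " 14 days."]))
print(msg)
print(events)
```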

6. Deleting Conversations

Explanation:

Handles DELETE requests to /delete_conversations to clear all conversations from the SQLite database.

Code Block:

    @app.route('/delete_conversations', methods=['DELETE'])
    def delete_conversations():
        """Delete all conversations from the database."""
        conv_db.delete_all_conversations()
        return jsonify({"success": True, "message": "All conversations deleted."})

Summary:

This route clears stored conversations, ensuring users can reset their chat history.

7. Updating Conversations

Explanation:

Handles POST requests to /update_conversation to append user and bot messages to the conversation history.

Stores updated conversations in the database for persistence.

Code Block:

    @app.route('/update_conversation', methods=['POST'])
    def update_conversation():
        data = request.get_json()
        user_input = data.get('user_input')
        llm_output = data.get('llm_output')
        flow_index = data.get('flow_index')
        conversation_index = data.get('conversation_index')

        bot_conversation.append({"role" : "user", "content" : user_input})
        bot_conversation.append({"role" : "assistant", "content" : llm_output})

        conv_db.store_conversation(user_input, llm_output, flow_index, conversation_index)

        return jsonify({"success" : True})

Summary:

This route appends each completed exchange (the user's message and the bot's reply) to the in-memory conversation history and saves it to the database.
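The ConversationDB class itself is not shown in this post. As a rough idea of what an SQLite-backed version could look like, here is a minimal sketch. The `ConversationStore` class name, the table schema, and the ordering by conversation_index and flow_index are all assumptions; only the three method names and the argument order of store_conversation() come from the routes above.

```python
import sqlite3


class ConversationStore:
    """Hypothetical stand-in for ConversationDB, backed by SQLite."""

    def __init__(self, path=":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            """CREATE TABLE IF NOT EXISTS conversations (
                   id INTEGER PRIMARY KEY AUTOINCREMENT,
                   conversation_index INTEGER,
                   flow_index INTEGER,
                   user_input TEXT,
                   llm_output TEXT
               )"""
        )

    def store_conversation(self, user_input, llm_output, flow_index, conversation_index):
        # Parameterized query: values are never interpolated into the SQL string.
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO conversations "
                "(conversation_index, flow_index, user_input, llm_output) "
                "VALUES (?, ?, ?, ?)",
                (conversation_index, flow_index, user_input, llm_output),
            )

    def retrieve_conversations(self):
        rows = self.conn.execute(
            "SELECT conversation_index, flow_index, user_input, llm_output "
            "FROM conversations ORDER BY conversation_index, flow_index"
        )
        return rows.fetchall()

    def delete_all_conversations(self):
        with self.conn:
            self.conn.execute("DELETE FROM conversations")


db = ConversationStore()  # in-memory database, nothing is written to disk
db.store_conversation("Hi", "Hello! How can I help?", flow_index=0, conversation_index=0)
db.store_conversation("What is RAG?", "Retrieval-augmented generation.", flow_index=1, conversation_index=0)
saved = db.retrieve_conversations()
db.delete_all_conversations()
remaining = db.retrieve_conversations()
print(saved, remaining)
```

Passing a file path instead of ":memory:" would make the history survive restarts, which is the behaviour the project relies on.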

8. Running the Application

Explanation:

Sets up the Flask application to run on 0.0.0.0 at port 8000.

Code Block:

    if __name__ == "__main__":
        app.run(host="0.0.0.0", port=8000)

Summary:

Starts Flask's built-in server. Binding to 0.0.0.0 makes it listen on all network interfaces, so the application is reachable from other machines on the network as well as locally.
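If you would rather not hard-code the host and port, one option is to read them from the environment and default to a local-only address. This is a sketch, not part of the project: the `get_bind_address` helper and the APP_HOST and APP_PORT variable names are made up for illustration.

```python
import os


def get_bind_address(env=None):
    """Read host and port from an environment-style mapping, with local-only defaults."""
    env = os.environ if env is None else env
    host = env.get("APP_HOST", "127.0.0.1")  # hypothetical variable name
    port = int(env.get("APP_PORT", "8000"))  # hypothetical variable name
    return host, port


local = get_bind_address({})
shared = get_bind_address({"APP_HOST": "0.0.0.0", "APP_PORT": "8080"})
print(local, shared)
```

The returned pair can then be passed on as `app.run(host=host, port=port)`.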

Project Setup Instructions

Clone the repository:

    git clone https://github.com/sairam-penjarla/pdf-rag-ai-chatbot
    cd pdf-rag-ai-chatbot

Create a virtual environment (see Learn VirtualEnv Basics):

    python -m venv env
    source env/bin/activate  # For Linux/Mac
    env\Scripts\activate  # For Windows

Install dependencies:

    pip install -r requirements.txt

Run the project:

    python app.py

Access the app at http://localhost:8000.

Conclusion

This project bridges the gap between LLMs and custom knowledge bases, making it perfect for businesses looking to create personalized AI solutions.

If you enjoyed this blog, check out other projects on my website and YouTube channel.

Happy Coding!